Lies, Damn Lies, and Project Statistics

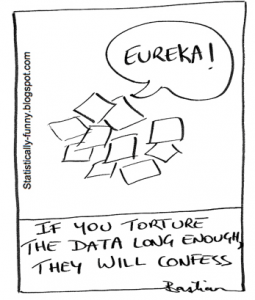

> Harvey’s term for torturing the data until it confesses is “p-hacking,” a reference to the p-value, a measure of statistical significance. P-hacking is also known as overfitting, data-mining—or data-snooping, the coinage of Andrew Lo, director of MIT’s Laboratory of Financial Engineering. Says Lo: “The more you search over the past, the more likely it is you are going to find exotic patterns that you happen to like or focus on. Those patterns are least likely to repeat.”
>
> Bloomberg Businessweek, April 10, 2017, “Lies, Damn Lies, and Financial Statistics”
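Lo's point can be made concrete with a small simulation. This is a hypothetical illustration, not something from the article or from Harvey's or Lo's work. It generates an outcome that is pure random noise, searches 500 equally random candidate "signals" for the one that best correlates with the past, and then checks that winner on new data. The candidate count, sample sizes, and function names are arbitrary assumptions chosen for the sketch.

```python
import random
import statistics


def correlation(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs) ** 0.5
    sy = sum((y - my) ** 2 for y in ys) ** 0.5
    return cov / (sx * sy)


def snoop(n_candidates=500, n_past=40, n_future=40, seed=1):
    """Search many random 'patterns' for the best fit to past noise,
    then check the winner on fresh data. Returns (in_sample_r, out_of_sample_r)."""
    rng = random.Random(seed)
    # The outcome is pure noise: there is no real pattern to find.
    past = [rng.gauss(0, 1) for _ in range(n_past)]
    future = [rng.gauss(0, 1) for _ in range(n_future)]
    # Each candidate signal is also pure noise, spanning past and future periods.
    candidates = [
        [rng.gauss(0, 1) for _ in range(n_past + n_future)]
        for _ in range(n_candidates)
    ]
    # "Search over the past": keep whichever candidate fits history best.
    best = max(candidates, key=lambda s: abs(correlation(s[:n_past], past)))
    in_sample = correlation(best[:n_past], past)
    out_of_sample = correlation(best[n_past:], future)
    return in_sample, out_of_sample


in_r, out_r = snoop()
print(f"best fit to the past: r = {in_r:+.2f}")
print(f"same pattern on new data: r = {out_r:+.2f}")
```

Typically the winning pattern shows a sizable correlation with the past and close to none on new data. Searching more candidates (raising `n_candidates`) makes the in-sample fit look more impressive without making the pattern any more likely to repeat.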

I always tried to stick with simple numbers like averages. I did like the slightly more complex notion of an average plus two standard deviations, because it often covered 95% or more of the cases, so I could argue that we would be done by that number. However, statistics always confused people. More than that, people would often crank out their own numbers with only a vague notion of what they were doing, and then show them as “proof” that something was or was not possible. When everyone was throwing hacked numbers up on PowerPoint slides, no number was believable.

With simple averages, I could illustrate what we were actually seeing. If we averaged nine months to deliver a software release, I could show the last few actual projects, and management could see how well the average matched the actual numbers. These simple averages matched what we were seeing and so helped to train our intuition.

For more, see “Your Average Is Powerful.”

What project numbers are you paying attention to, and do they match the reality you are seeing in your project?