Global Project Management? Further Away Can Be Better!

A study of a large global project showed that quality was not compromised because of the global nature of the effort. As many projects are global these days, that is a reassuring finding. I have often observed something more, however. In an organization that is not generally doing well, the further we were from the home office, the better we did. So if we want a successful project, should we make sure it is distributed and away from the central location?

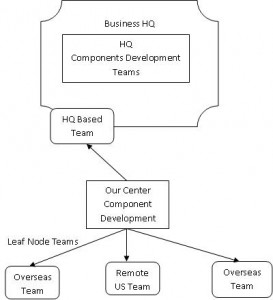

I was hired to project manage a part of the development of a high tech consumer product that was to be sold worldwide. We had gone from a single site development project (we were, in turn, just a component in an already global effort) to having multiple sites to manage. The VP whom I worked for said he had no problem managing the effort locally, but he needed help managing the four new remote teams that now came under his responsibility. Our sites consisted of a team at the main headquarters (HQ) site, the team at our local development Center site and three “leaf node” teams — two in other countries.

No problem. While they were far away, in different time zones, with different languages, and with different cultures, this should not be too hard. I had worked multinational efforts for the past 20 years, and while they were sometimes distributed, generally we were all working in close proximity.

To help in this new development, we had all the standard tools of e-mail, teleconferencing capabilities and an intranet that allowed us to easily communicate. Our defect tracking system was global. When someone entered a defect, everyone would soon see the same thing. Our feature and project management tracking systems were not as distributed. Information could be posted and viewed by everyone on the intranet, but the system itself did not directly help with making things available worldwide. Setting up good communications looked like a technical challenge, and one I was sure I could handle. Otherwise, it was just good software development management 101. If only it were that easy.

The first thing I did as the new manager was to take the existing component project plan, contact all the other managers, introduce myself, and ask them where they were on delivering the software we needed for our component. The managers I contacted included those of the other components, most of which were at the main HQ site, and those of our own teams, distributed as I indicated above.

When I called each manager, I got, eerily, the same response. They were very nice and welcoming. They expressed polite surprise over the commitment I claimed they had. They said they had made no such commitment. Oh boy. I didn’t even have a real plan to pull folks together, yet I had to report status against this plan to the corporate VP by the end of the month. It turned out that this plan was not worth much, but it was the official plan, and everyone eventually just said “yeah, yeah, OK, we’ll do it.” Not having a good schedule, it seems, was only part of the problem (see also Get The Schedule Right – Project Management Tools).

I captured status daily and updated our globally available project plan and schedule using Microsoft Project. That was not a big effort as stuff happened daily: things got done or things went to heck. This daily update was often unpopular as folks wanted to hold back on reporting until the problems were solved. The senior level managers I worked with regularly gave me a range of generic noncommittal answers. Luckily, when I talked directly to the software development leads, I got real information on where things stood. However, I noticed over time a troubling pattern that was a bit beyond even the unrealistic schedule we were following.

When I talked with the senior level manager at the main site, which was three hours north of where I was, I usually got rather caustic statements that everything was going OK. He also insisted on knowing whether things elsewhere were going well or not. This particular individual was clearly assuming that everything remote from the HQ site must be going badly. In fact, he would often state that these remote sites were the reason his team was not getting things done as fast as they could, but that his team would deliver on time in spite of it. He would also tell me I needed to spend more time with each of these remote sites, and less with his team, because that was where the real challenges were.

At my own site, the center for our component development, we had something similar. We were way behind in producing the architecture document and APIs (the way other components talk to our component). The local senior manager was getting annoyed that the other sites kept asking for these. The leaf node sites, those that were most remote and in other countries, had a hard time making any progress without the needed information. Generally the leaf node teams settled into an approach of making assumptions, strategizing how they would adjust them once real direction came, and pressing on doing the best they could against the deadlines they had been given.

What happened in this confusion and chaos? Everyone delivered their software for the first scheduled delivery. The HQ team’s software did not even compile successfully (i.e., had no chance at all of working). After a bit of pointed questioning, the software lead of the HQ team admitted that his senior manager told him to just give us whatever he had, no matter the state of it. It seems his senior manager assumed no one else would have anything, and so essentially tried to bluff by submitting code on the assumption that everything would be so bad that no one would notice. The leaf node teams all submitted working software based upon their published assumptions. However, that software would not work with the architecture and APIs that our center site had just completed and delivered. Our local center site senior manager pointed at the other teams and said “they didn’t use the APIs correctly.” In fact, the APIs had been published very late, which is why nothing worked together. What a mess.

This cycle of delivering code to integrate into our component continued regularly over the next months. While the software slowly got better with time, the pattern seen on the first delivery was consistent and came into greater clarity.

1. The team at the main HQ site was the least communicative and the least cooperative. The senior manager there continuously challenged what everyone else was doing and highlighted the problems in other sites’ software. The curious end of the story for this team was that the senior manager was eventually removed from his job. Once he was gone, this HQ team became one of our better and more responsive teams (they also reported directly to me for a while). But until that time, they were hands down the least effective team. That appeared to be because of the constant other demands placed on them and their senior manager by the schedule-induced chaos at the HQ.

2. Our center component site was supposed to pull everything together technically and architecturally. However, their senior manager essentially stopped talking to me. This senior manager would regularly produce his own status of what everyone was doing (because everyone had to work with them through the architecture and for software builds). This status would contrast starkly with my official status. The VP I worked for once told me I had better sync my status with the local senior manager. My response was that the local senior manager needed to be providing me with his updates and not trying to report his own self-serving status. Since I talked directly to all the software development leads, I had the accurate details. These details were not as optimistic as everyone wanted to present them. The center senior manager largely made up a good story each time that generally alluded to how other organizations were the reason we were behind. Somewhat humorously, I came to find out that the official plan I was trying to execute was originally created by this same senior manager before I came on board. He had made up this plan (i.e., it was neither coordinated with nor committed to by the other managers). It had gone forward and become the official plan because it fit the overall schedule. In his defense, all the other component senior managers had done something similar to fit into the required schedule. (For more on this see Honesty Is Just More Efficient – Project Management Tools).

3. The leaf node sites all delivered their software as they promised based upon the assumptions they publicly made. They had to rework their software numerous times to get it to work with the evolving architecture, but it was always software that we could rely upon. At one point another component senior manager expressed how he would love to be far enough along to use the working functionality that one of our leaf node teams had demonstrated.

I took away a general rule from this experience that remained true, at this organization, for almost the entire time I worked there. The rule was that the further the development team was from the HQ site, the better the team performed as to schedule and quality. This was apparently due to the fact that these more remote organizations were less impacted by all the chaos at the HQ. At the HQ, things could change in a moment by a conversation over a cube wall or in a corridor. That change could then change again several times throughout the day and week. These changes didn’t propagate to the remote sites very quickly. In fact, most of the changes became non-issues or changed again, so the remote site never got the brunt of the thrashing. The remote sites also worked harder at communicating what they were doing and fighting for feedback. The HQ and our center often refrained from communicating a change because “it isn’t finalized yet.” The leaf nodes seemed to do what they did both because they were remote, and because of language, time zone and culture differences. They seemed to know they needed to constantly ask questions, regularly confirm what they already knew, and report on what they were doing. When needed information was not forthcoming, they would make an assumption locally, tell the world what they were doing, and then press on.

The notion that distributed software development was inherently “riskier and more challenging than collocated development” was recently challenged in Communications of the ACM, August 2009, “Does Distributed Development Affect Software Quality? An Empirical Case Study of Windows Vista.” The authors went on to say “Based upon earlier work, our study shows that organizational differences are much stronger indicators of quality than geography.” Our experience on this high tech product development aligned well with this notion and further suggested that the more remote the location, the better the development quality. Keep in mind that this organization had significant problems in the first place in the form of a completely unrealistic development schedule. In this context one could readily see the advantages and strengths of being a remote development site. An organization without as significant an issue might not as readily see these factors.

If your organization is having significant issues, it might make sense to do project development of that new killer product in a remote location to keep it away from all the nuttiness of the problems at the main office.

7 thoughts on “Global Project Management? Further Away Can Be Better!”

MK Randall,

The rest of the story looks like:

http://pmtoolsthatwork.com/honesty-efficient/

But we eventually sorted it out, years later, and had some great project success – but a bit too late to keep the company from shrinking significantly.

In this case, the real secret was not a highly integrated and faithful MS Project Plan (we did that too) nor disciplined requirements management (ditto) nor disciplined configuration/build management (ditto), performance, testing, etc.

The secret that jumped us to on time with good quality was getting a schedule that was realistic. The rest of the disciplines we already had in place, but they were unable to make any difference when colliding with compressed schedules that shredded the effects of any good practices.

We didn’t take any longer to produce the product than it had taken in the past; we just allocated the full time from the beginning and then let people do their jobs. All those good practices suddenly started to make a difference, since we could now do them as intended.

The solution that worked for us was:

http://pmtoolsthatwork.com/get-schedule-right/

Good inputs.

The story is familiar: Inadequate Requirements Management; top-down schedule commitments, or commitments being made without adequate requirements analysis, design and sizing by the people who produce the code modules; resistance to open reporting, etc. Been there; done that.

Now for some questions:

*How did you track progress vs. plan?

*What was the granularity of the interim milestones that were tracked, i.e. months? weeks?

*Was the planning done on a duration or effort basis or both?

*Did each project use MS Project faithfully?

*Did you use metrics like Schedule Performance Index or Cost Performance Index or both?

*Was MS Project robust enough to deal with the multiple small projects that contributed to the overall project/product/program or whatever you called it?

*How often were the individual MS Project plans “harvested” into an overall plan with projected completion dates recalculated?

*How often were there replans with supporting MS Project plans to support the replan?

*How were defects categorized, tracked and measured? Were teams rewarded for discovering defects early in the product development cycle, e.g. requirements, high level design, detailed design, etc.?

*If the schedule was fixed, was product content/functionality a variable?

*How were interdependencies between different teams/groups identified and documented? Committed to? Managed?

*Did you have the support of your senior management who had authority over other locations?

*Were some of the code modules produced by outside companies that you contracted with? If so, was managing those “projects” different?

*How often were code modules from various groups integrated into the “mainline” product under development?

*What were the integration requirements?

*How were individual code modules verified? Inspections? Unit Testing?

*At what point was there an operating kernel/mainline that could be regression tested?

*How often were regression tests run?

*How long did it take to restore stability to the mainline once a regression was found, i.e. how long to fix the faulty changes?

*Were there any performance/speed requirements? If so, how were they tracked? How often would performance regression tests run?

*Was there a consistent code change management system in use by all teams? Could changes be backed out easily?

*Did teams submit changes to mainline code or did they “replace” entire code modules as a means of integrating changes?

These are the types of things I dealt with when managing software projects and programs a number of years ago. So perhaps project management technology has changed significantly since then. But the story was so familiar, it makes me wonder if much has changed at all. I sometimes think that Measurement and Analysis is only seriously done in Govt. projects, and I wonder about those sometimes. And, of course, there are always a few leading edge non-govt organizations. However, I continually see too much high-testosterone, top-down management to suit me.

Idan Roth worked for Amdocs and is completing his PhD on this subject these days. You might want to contact him.